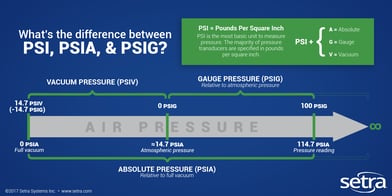

Absolute pressure is pressure measured relative to a perfect vacuum, which is defined as zero pressure. An absolute reading therefore includes the atmospheric pressure as well as the pressure of the system being measured.
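
Put another way, absolute pressure equals gauge pressure plus the local atmospheric pressure. The minimal Python sketch below shows that relationship. It assumes all values are in PSI and that the local atmospheric pressure is already known. The function names are illustrative only and do not come from any library.

```python
def absolute_from_gauge(gauge_psi: float, atmospheric_psi: float) -> float:
    """Convert a gauge reading (PSIG) to absolute pressure (PSIA)."""
    # Add back the atmosphere that a gauge reading treats as its zero point.
    return gauge_psi + atmospheric_psi


def gauge_from_absolute(absolute_psi: float, atmospheric_psi: float) -> float:
    """Convert an absolute reading (PSIA) to gauge pressure (PSIG)."""
    # Subtract the local atmosphere to get the reading relative to ambient.
    return absolute_psi - atmospheric_psi
```

For example, `absolute_from_gauge(32.0, 14.7)` gives roughly 46.7 PSIA for a system reading 32 PSIG at sea level.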

A diverse range of applications requires the measurement of air pressure. Depending on the application, users need to interpret pressure readings in different ways and report them in units that reflect those readings accurately.

Absolute Pressure Defined

Absolute pressure is measured relative to a full vacuum. In contrast, pressure measured relative to atmospheric pressure (also known as barometric pressure) is called gauge pressure. A full vacuum reads 0 PSIA, while the average barometric pressure at sea level is approximately 14.7 PSIA.

When measuring gauge pressure, the current atmospheric pressure is the baseline and therefore reads as 0 PSIG. Every reading a gauge transducer takes is relative to that reference, which can change with weather conditions, temperature, or altitude. At sea level, full vacuum is therefore -14.7 PSIG; expressed as vacuum pressure, the same condition is +14.7 PSIV.
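
The three scales differ only in their zero point and sign. The sketch below expresses a single absolute pressure in PSIA, PSIG and PSIV. It assumes the 14.7 PSI sea-level value from the text as the default atmosphere. The constant and function names are hypothetical, chosen for illustration. Note that PSIV is normally only quoted for pressures below atmospheric.

```python
STANDARD_ATMOSPHERE_PSI = 14.7  # approximate sea-level value used in the text


def to_all_scales(absolute_psi: float,
                  atmospheric_psi: float = STANDARD_ATMOSPHERE_PSI) -> dict:
    """Express one absolute pressure as PSIA, PSIG and PSIV."""
    gauge = absolute_psi - atmospheric_psi   # 0 at ambient, negative below it
    vacuum = atmospheric_psi - absolute_psi  # 0 at ambient, positive below it
    return {"psia": absolute_psi, "psig": gauge, "psiv": vacuum}
```

For example, `to_all_scales(0.0)` (full vacuum) returns `{'psia': 0.0, 'psig': -14.7, 'psiv': 14.7}`, matching the figures above.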

Where is absolute pressure measured?

Perhaps counterintuitively, the transducers that measure barometric pressure for weather forecasting are measuring the absolute pressure of the surrounding air. A gauge pressure transducer would not work here. It uses the same atmosphere it is trying to measure as its reference, so its readings would always be 0.
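
The next sketch makes this concrete. It models an idealized gauge transducer as "measured minus reference" and an absolute transducer as "measured minus a sealed 0 PSIA vacuum". The ambient pressures are hypothetical values, not real weather data.

```python
def gauge_reading(measured_psia: float, reference_psia: float) -> float:
    """Idealized gauge transducer: difference from its reference port."""
    return measured_psia - reference_psia


def absolute_reading(measured_psia: float) -> float:
    """Idealized absolute transducer: referenced to a sealed vacuum (0 PSIA)."""
    return measured_psia - 0.0


# Hypothetical ambient pressures over several days, in PSIA.
ambient_samples = [14.7, 14.5, 14.9, 14.3]

for ambient in ambient_samples:
    # Measuring the atmosphere itself means the gauge's reference is the very
    # air being measured, so the difference is always zero.
    g = gauge_reading(ambient, ambient)
    a = absolute_reading(ambient)
    print(f"ambient={ambient:.1f}  gauge={g:.1f} PSIG  absolute={a:.1f} PSIA")
```

Every gauge value prints as 0.0 whatever the weather, while the absolute column tracks the actual barometric pressure.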

Absolute pressure transducers are also used in semiconductor manufacturing, specifically in the storage and delivery of toxic arsine and phosphine gases. Because atmospheric conditions fluctuate, these systems must be accurate and must use a reference that never changes: full vacuum.
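
The sketch below shows why a static reference matters. It models a hypothetical sealed vessel whose internal pressure never changes while the surrounding atmosphere drifts. All numbers are invented for illustration and are not real gas-storage figures.

```python
from typing import Tuple

# Hypothetical values for illustration only.
VESSEL_PSIA = 10.0                    # sealed vessel whose contents never change
ATMOSPHERE_PSIA = [14.7, 14.4, 15.0]  # ambient pressure drifting with the weather


def read_vessel(vessel_psia: float, atmosphere_psia: float) -> Tuple[float, float]:
    """Return (gauge reading in PSIG, absolute reading in PSIA) at one moment."""
    gauge = vessel_psia - atmosphere_psia  # referenced to the changing atmosphere
    absolute = vessel_psia - 0.0           # referenced to a fixed vacuum
    return gauge, absolute


readings = [read_vessel(VESSEL_PSIA, atm) for atm in ATMOSPHERE_PSIA]
for atm, (gauge, absolute) in zip(ATMOSPHERE_PSIA, readings):
    print(f"atmosphere={atm:.1f}  gauge={gauge:+.1f} PSIG  absolute={absolute:.1f} PSIA")
```

Across the three samples, the gauge column moves by about 0.6 PSI even though nothing inside the vessel changed. The absolute column stays at 10.0 PSIA.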
